The unified solution to verify, protect & govern AI systems at scale

Developed by top-tier AI red teamers for mission-critical deployments

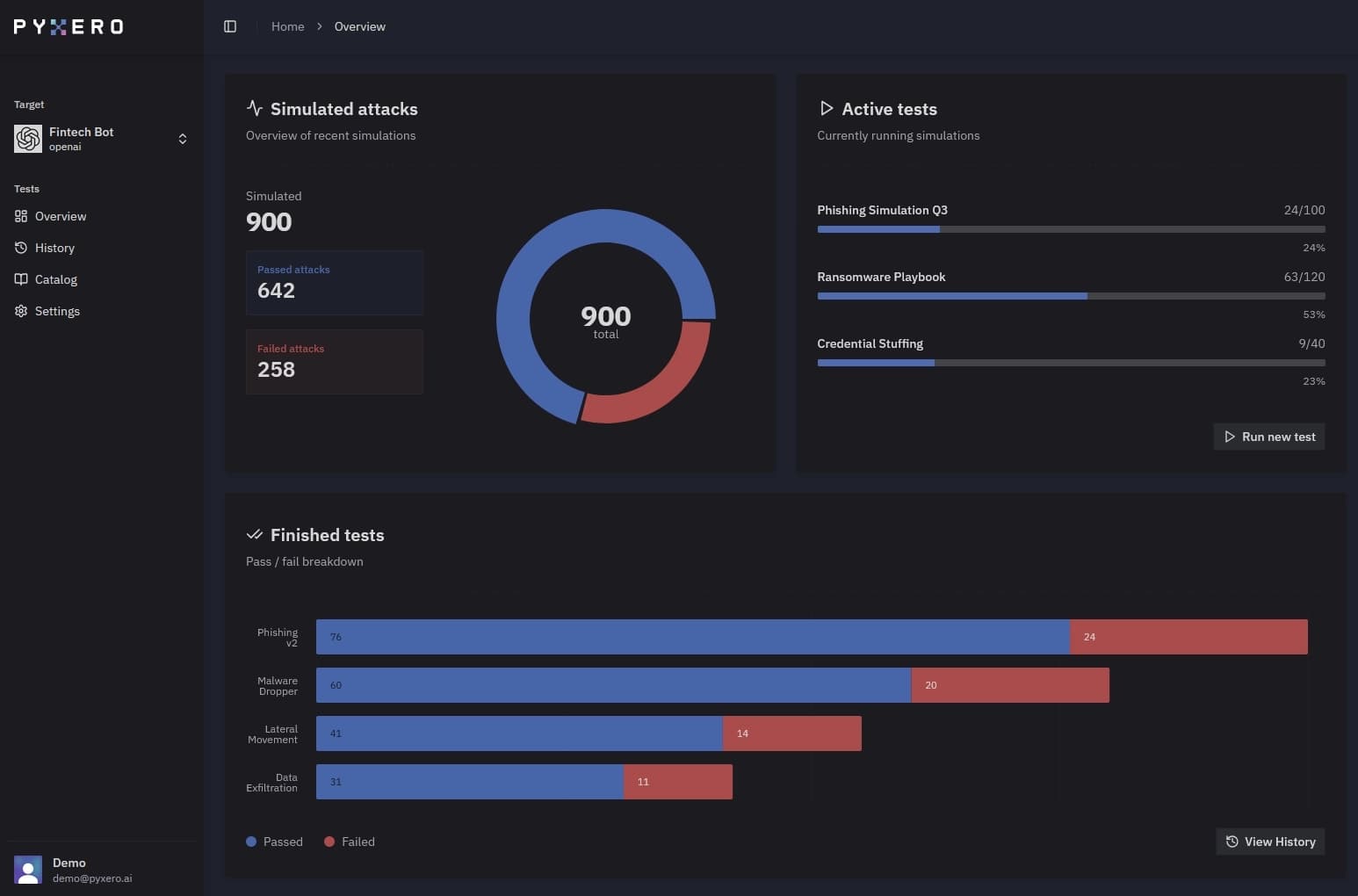

Automated AI Red Teaming

Continuously test your AI systems for security & safety risks.

Conduct large-scale security assessments and run specialized threat scenarios against your AI infrastructure from development to production.

Adaptive Remediation

Resolve vulnerabilities across your AI infrastructure based on the risks discovered during testing.

Improve your AI system security by hardening your system prompt and applying smart remediation strategies.

Multi-Layer Defense System (MLDS) for Jailbreak Prevention

Details

1. Strengthen the current input filtering system to recognize and prevent jailbreak attempts, including prompt preambles and instructions that encourage persona shifts.
2. Deploy an output monitoring mechanism that identifies responses indicative of a jailbreak (such as restricted content disclosure or compliance with prohibited requests) and routes them for human review and ongoing filter improvement.
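The two layers described above can be illustrated with a minimal Python sketch. It is not the Pyxero implementation: the patterns, function names, and the `ReviewQueue` class are hypothetical and chosen only to show how an input filter and an output monitor might fit together. A production system would likely rely on a much broader, continuously updated rule set or a trained classifier.

```python
import re
from dataclasses import dataclass, field

# Hypothetical input patterns (assumption): jailbreak preambles and
# instructions that encourage a persona shift.
INPUT_PATTERNS = [
    re.compile(r"ignore (all|any|the) (previous|prior) instructions", re.I),
    re.compile(r"\b(pretend to be|act as)\b", re.I),
    re.compile(r"\byou are now\b", re.I),
]

# Hypothetical output patterns (assumption): signs that a response
# discloses restricted content or complies with a prohibited request.
OUTPUT_PATTERNS = [
    re.compile(r"my system prompt is", re.I),
    re.compile(r"as an unrestricted (ai|model)", re.I),
]


@dataclass
class ReviewQueue:
    """Collects flagged exchanges for human review and filter tuning."""
    items: list = field(default_factory=list)

    def submit(self, prompt: str, response: str, reason: str) -> None:
        self.items.append(
            {"prompt": prompt, "response": response, "reason": reason}
        )


def filter_input(prompt: str) -> bool:
    """Layer 1: return True if the prompt matches a known jailbreak pattern."""
    return any(p.search(prompt) for p in INPUT_PATTERNS)


def monitor_output(prompt: str, response: str, queue: ReviewQueue) -> bool:
    """Layer 2: queue the exchange and return True if the response looks jailbroken."""
    for pattern in OUTPUT_PATTERNS:
        if pattern.search(response):
            queue.submit(prompt, response, reason=pattern.pattern)
            return True
    return False
```

In this sketch, a prompt that passes `filter_input` is sent to the model, and the response is then checked by `monitor_output`. Exchanges that land in the review queue can inform new input patterns, which is the feedback loop the second step describes.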

AI Governance & Compliance

Stay compliant with AI policies and frameworks throughout your entire pipeline. Continuously adapt to evolving regulations through automated compliance mapping.

MITRE ATLAS

MITRE ATLAS (Adversarial Threat Landscape for Artificial-Intelligence Systems) is a global knowledge base of adversary tactics and techniques against AI systems, based on real-world observations and demonstrations.

BSI Guidelines

The Bundesamt für Sicherheit in der Informationstechnik (BSI), Germany's federal cybersecurity agency, provides guidance on the use of generative AI models, particularly large language models (LLMs). These models learn from existing data and can create new content, but their adoption also brings IT security risks. To mitigate these risks, the BSI recommends security measures such as robust testing and secure deployment.

AI threat detection and response

Monitor and neutralize prompt-based attacks, data leakage, and harmful outputs across your production AI infrastructure. Automatically detect and respond to emerging threats while maintaining system performance and user experience.

Scale AI initiatives confidently without security trade-offs

The Pyxero Platform optimizes AI implementation speed, streamlines security operations, and prevents critical incidents with intelligent real-time defense.

Without Pyxero:

Manual processes slow time-to-market

AI projects face delays from time-intensive testing, unclear accountability, and non-automated security procedures.

Incomplete AI attack surface awareness

Teams lack sophisticated monitoring to continuously assess, track, or validate fluid LLM behaviors and emerging threats.

Reactive compliance approaches

Adapting to new regulatory frameworks requires intensive manual coordination, creating compliance gaps and audit vulnerabilities.

Fragmented AI risk intelligence

Organizations lack unified visibility across security testing, operational monitoring, and policy enforcement – if these capabilities exist at all.

With Pyxero:

Continuous adversarial testing at enterprise scale

Deploy comprehensive, ongoing security assessments to identify threats faster and accelerate remediation across your entire AI infrastructure.

Complete AI threat landscape awareness

Gain unified oversight of your LLM ecosystem — covering inputs, autonomous agents, and operational patterns — through centralized monitoring.

Effortless policy management and audit preparation

Monitor AI governance requirements using automated intelligence and regulatory-compliant reports that scale with international standards.

All-in-one AI security orchestration

Integrate every aspect of AI protection — vulnerability testing, operational security, and compliance management — into a single dedicated solution.